Collateral Damage in the War Against Online Harms

A summary of this report is also available on Top10 VPN’s website here.

Table of Contents

- Key findings

- Introduction: Why are websites being blocked in the UK?

- PART ONE: The policy context

- PART TWO: Understanding our Blocked.org.uk data

- PART THREE: Research Findings

- Problematic categories of blocks

- Content misclassification

- Domestic violence and sexual abuse support networks

- School websites

- LGBTQ+ sites

- Counselling, support, and mental health sites

- Wedding sites

- Drain unblocking services

- Photographers

- Builders, building supplies and concrete

- Religious sites

- Charities and non-profit organisations

- Alcohol-related (non-sales) sites

- The “Scunthorpe Problem”

- Blocks which cause damage at a technical level

- Overbroad blocking categories

- Products already subject to age restrictions

- Cannabidiol products blocked as drugs

- Commercial VPN services

- VPN and remote access software

- Blocks are not being adequately maintained

- Unblock request findings

- Replies to unblock requests

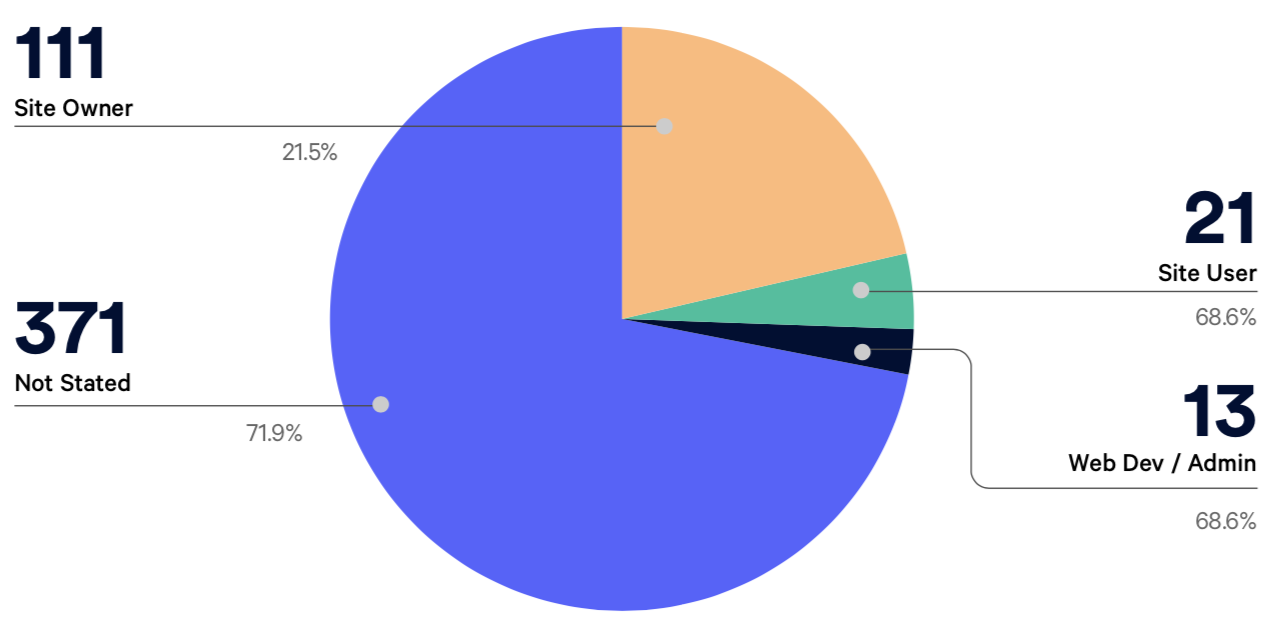

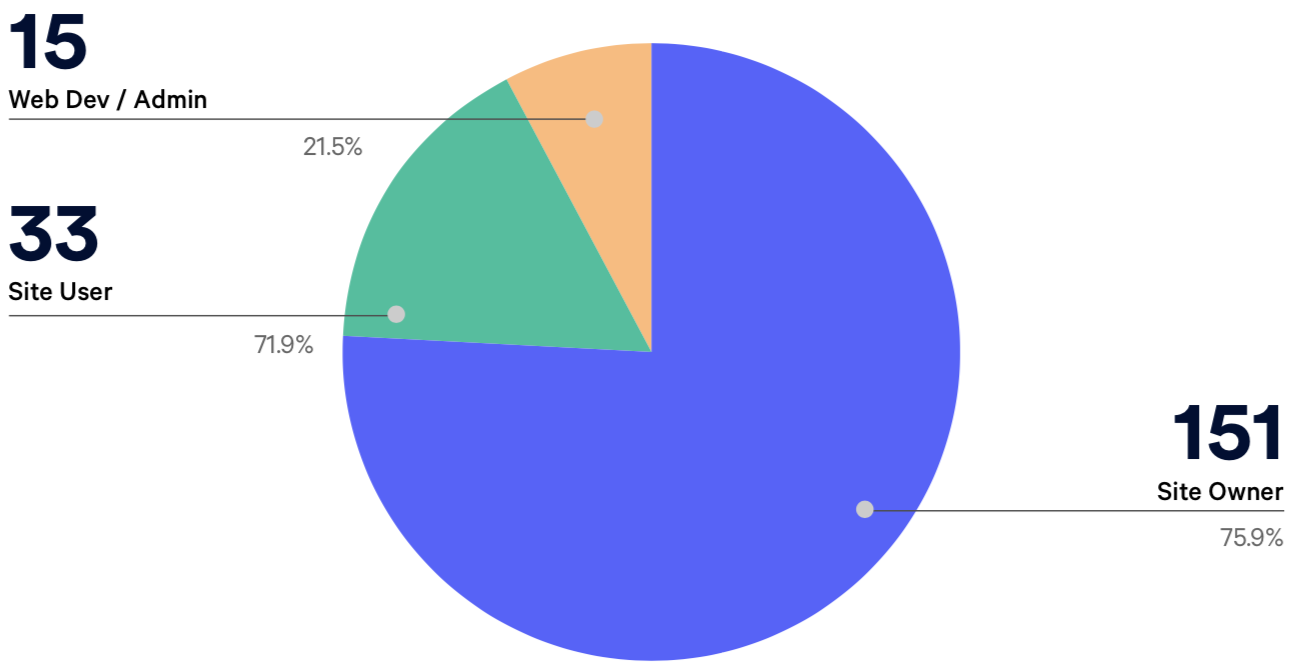

- Sources of unblock requests

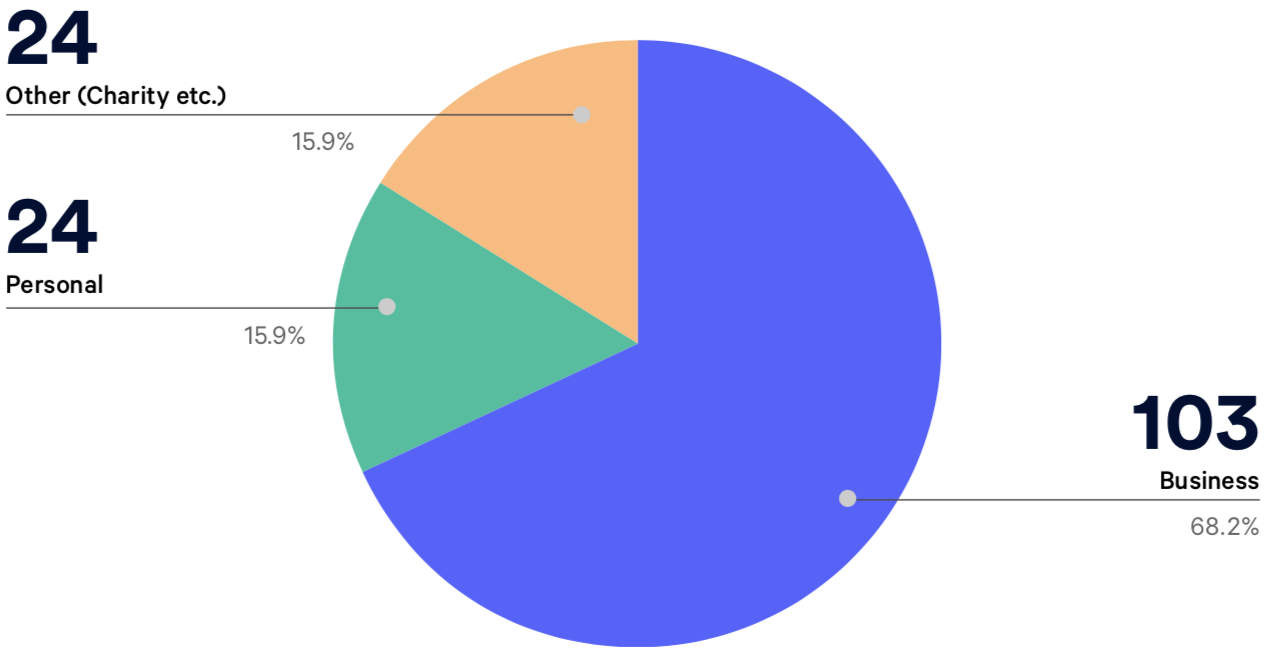

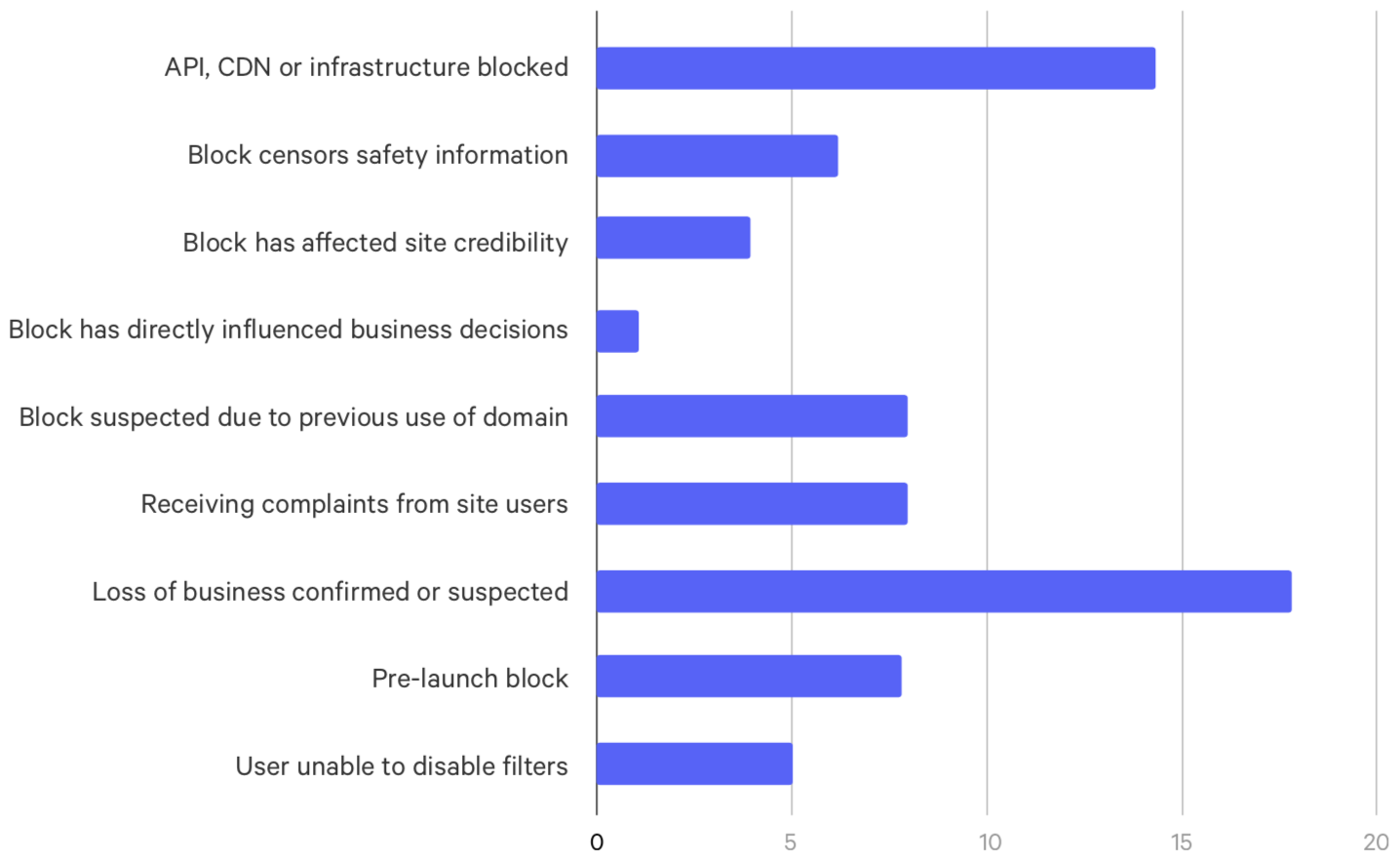

- Damage cited by Blocked.org.uk users

- Mobile network inconsistencies

- Complaints and appeals

- The future of web filters

- Conclusion

- Appendix A – Raw Data

- Table A – Keyword list categories

- Table A.1 – Keyword list categories

- Table A.2 Verified search results, UK sites only

- Table A.3 Unverified search results

- Table A.4 Unverified search results, .uk domains only

- Table B – Unblock request categories

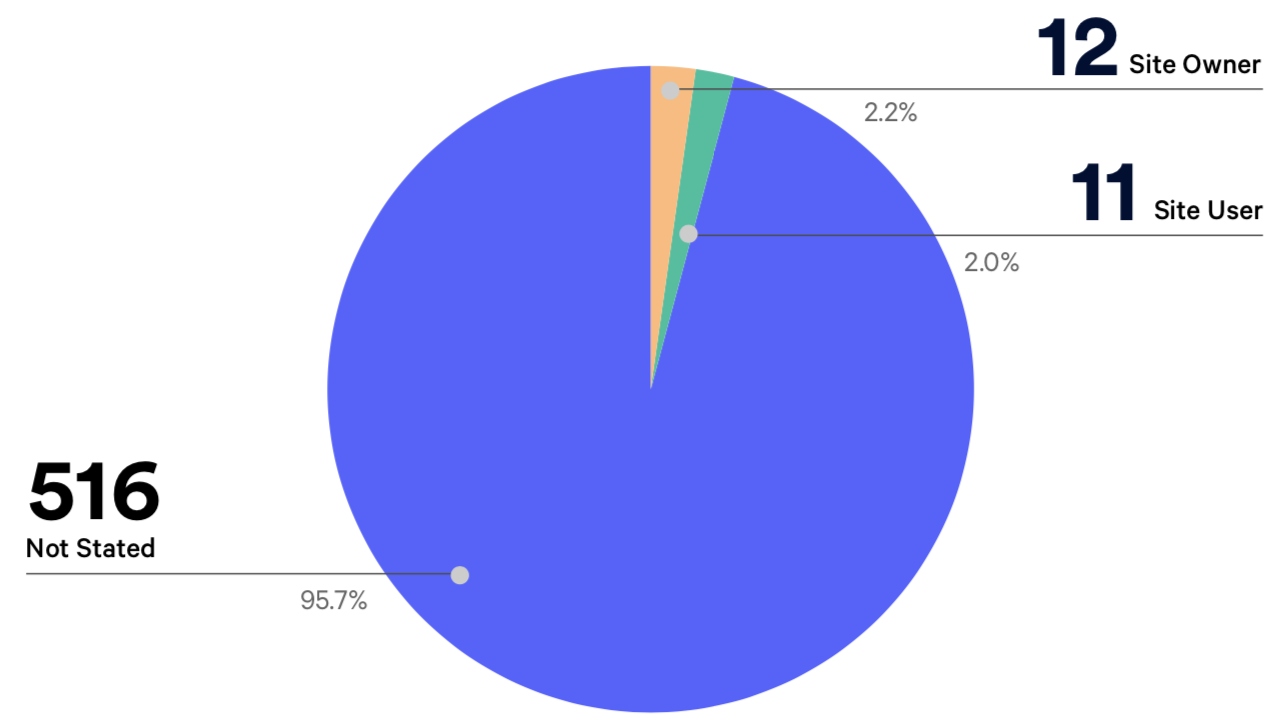

- Table C – Unblock requests categorised by user affiliation

- Table D – Breakdown of damage alleged by users submitting unblock requests

- Table E – Unblock requests forwarded to each ISP

- Table F – ISP reply statistics (aggregate)

- Table G – ISP reply statistics (per-ISP)

- Table H – Mobile network blocking inconsistencies

- Appendix B – Methodology for reporting statistics

- Appendix C – Technical challenges from filtering products

- Appendix D – Bibliography

Key findings

This research examined the 1,881 unblock requests made through our Blocked.org.uk tool since 2017.1 The tool helps people ask Internet Service Providers (ISPs) to remove wrongfully included websites from their adult content filters. As part of the Blocked project, we have indexed over 35,000,000 websites since 2014, creating a database of over 760,000 blocked websites that lets users search for and check domains they suspect may be blocked.2

Scale of errors

- While ISPs and the Government have downplayed the significance of errors, we have seen over 1,300 successful complaints forwarded to ISPs about incorrectly blocked domains.3

- Further analysis of the blocked domains in our database suggests that this is only a fraction of the errors present. For instance, while we have only received 122 requests to unblock counselling and mental health websites, a simple keyword search of the database shows over 112 more that may still be wrongfully blocked.4

What gets blocked

- A great deal of useful and important material is being blocked. Even on ISPs’ own estimates, thousands of errors are made.

- Some blocking is difficult to understand: over 1,700 wedding services’ sites and over 730 sites relating to photography may be incorrectly blocked at the time of writing.5

- Many local pubs’ websites are blocked, yet those of other bars and restaurants are not necessarily blocked.

- A set of simple searches returns thousands of blocked sites that urgently need review. We believe many more errors can be found.
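As a rough illustration of the kind of keyword search referred to above, the sketch below flags blocked domains whose names contain category keywords. The domain names, keyword lists and function name are all hypothetical; this is not the Blocked.org.uk database or its actual search method. Matches are only candidates for human review, since simple substring matching will also catch unrelated names.

```python
from collections import defaultdict

# Hypothetical category keywords; not the report's actual keyword lists.
KEYWORDS = {
    "wedding": ["wedding", "bridal"],
    "photography": ["photograph", "photo"],
    "counselling": ["counselling", "therapy"],
}


def candidate_misblocks(blocked_domains, keywords=KEYWORDS):
    """Group blocked domains by the first category whose keyword appears in the name."""
    results = defaultdict(list)
    for domain in blocked_domains:
        name = domain.lower()
        for category, words in keywords.items():
            if any(word in name for word in words):
                results[category].append(domain)
                break  # assign one category per domain to avoid double-counting
    return dict(results)


# Made-up example domains, for illustration only.
blocked = [
    "smith-wedding-cakes.example",
    "lakeside-photography.example",
    "northtown-counselling.example",
    "casino-bonus.example",
]
found = candidate_misblocks(blocked)
for category, domains in found.items():
    print(category, len(domains), domains)
```

Each flagged domain would still need manual verification before being counted as an error.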

Evidence from errors reported to us

- 98 reported sites for counselling, support, and mental health services have been unblocked.6

- Over 55 charities or non-profit organisations have had their websites unblocked through our tool.7

- At least 59 sites dedicated to domestic violence or sexual abuse support have been blocked over the lifetime of the Blocked tool. 14 of these are still blocked.8

- LGBTQ+ community sites are often incorrectly blocked, with users having reported 40 through the Blocked site.9

Complaints made through Blocked.org.uk

- An increasing number of complaints are made by site owners and users. In 2018, we saw more than 25% of unblock requests coming directly from site owners or users.10

- Site owners often complain about wrongful blocks causing business damage and reputational issues.11

ISP responses

-

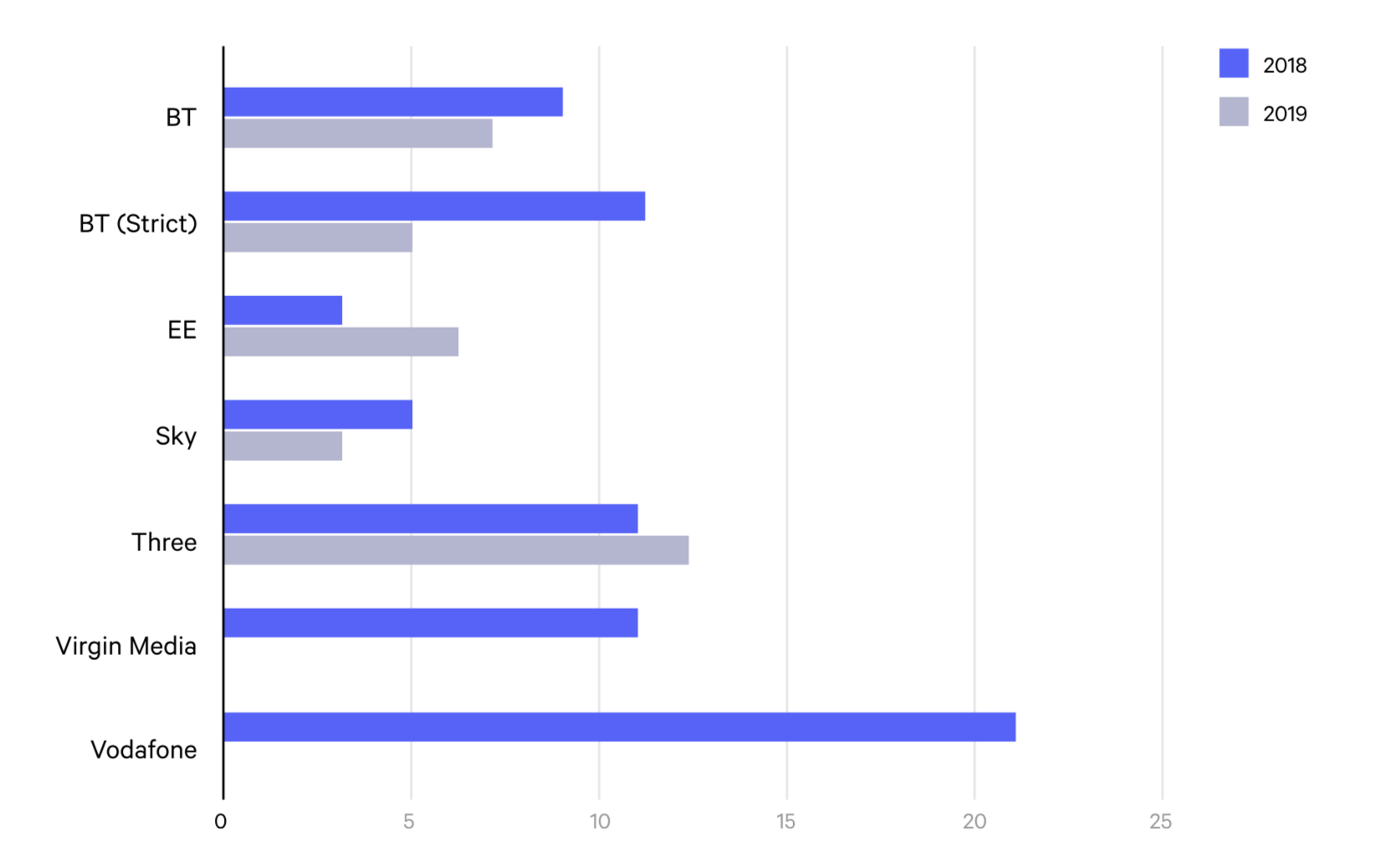

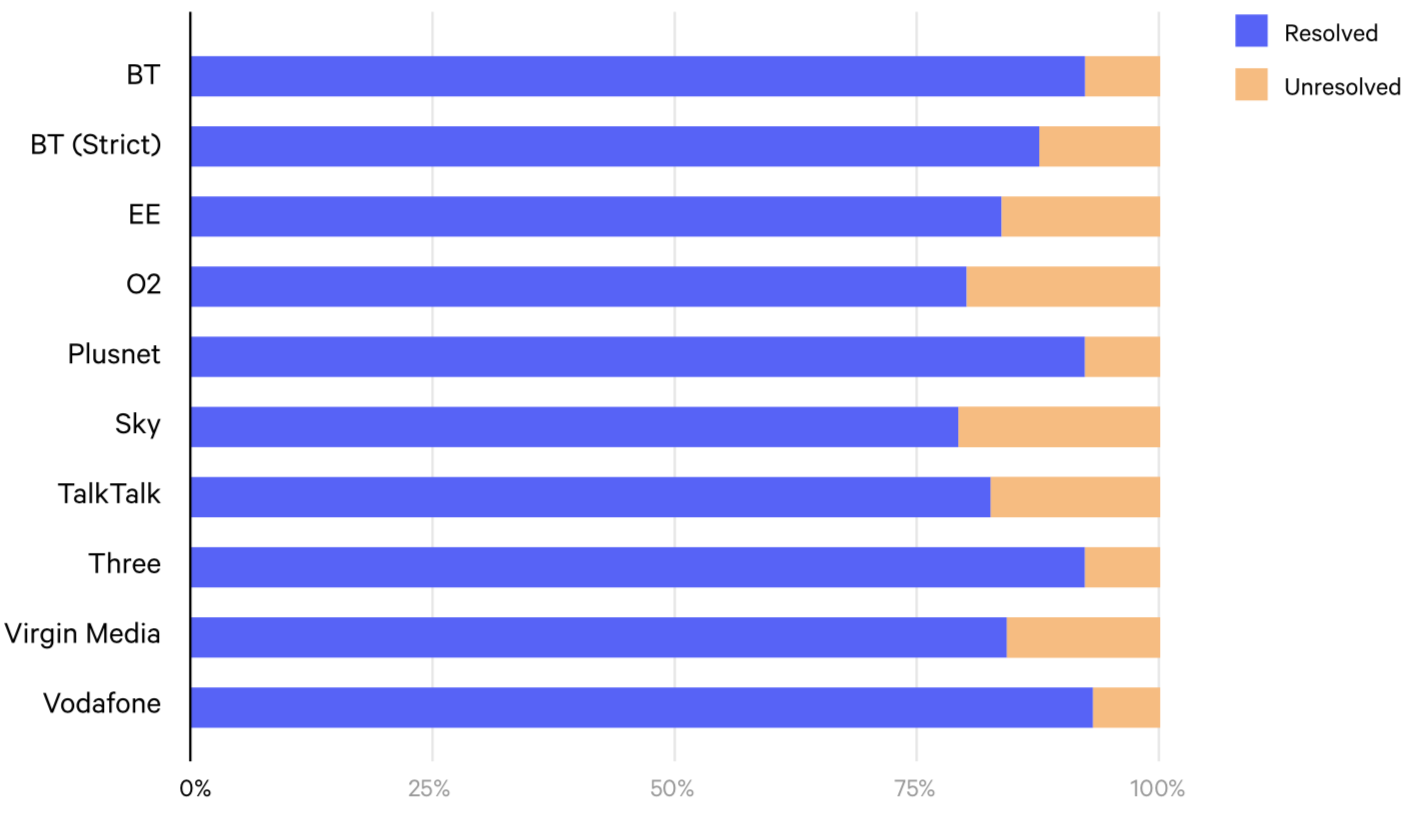

While some ISPs respond to complaints reasonably quickly, others are slow. The average was 8 days in 2018. Vodafone took 21 days on average to respond to our requests. Virgin Media and Three took 11 days.

-

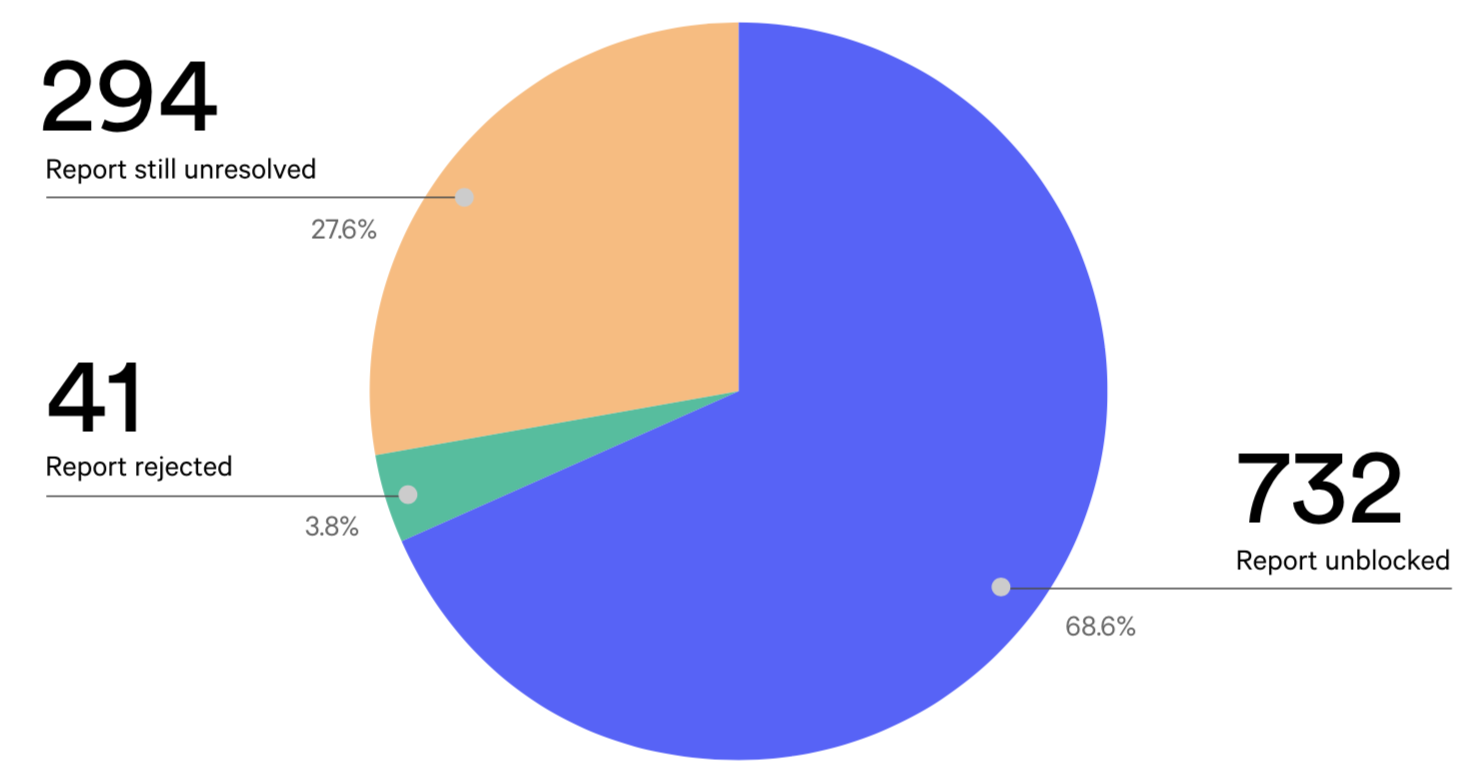

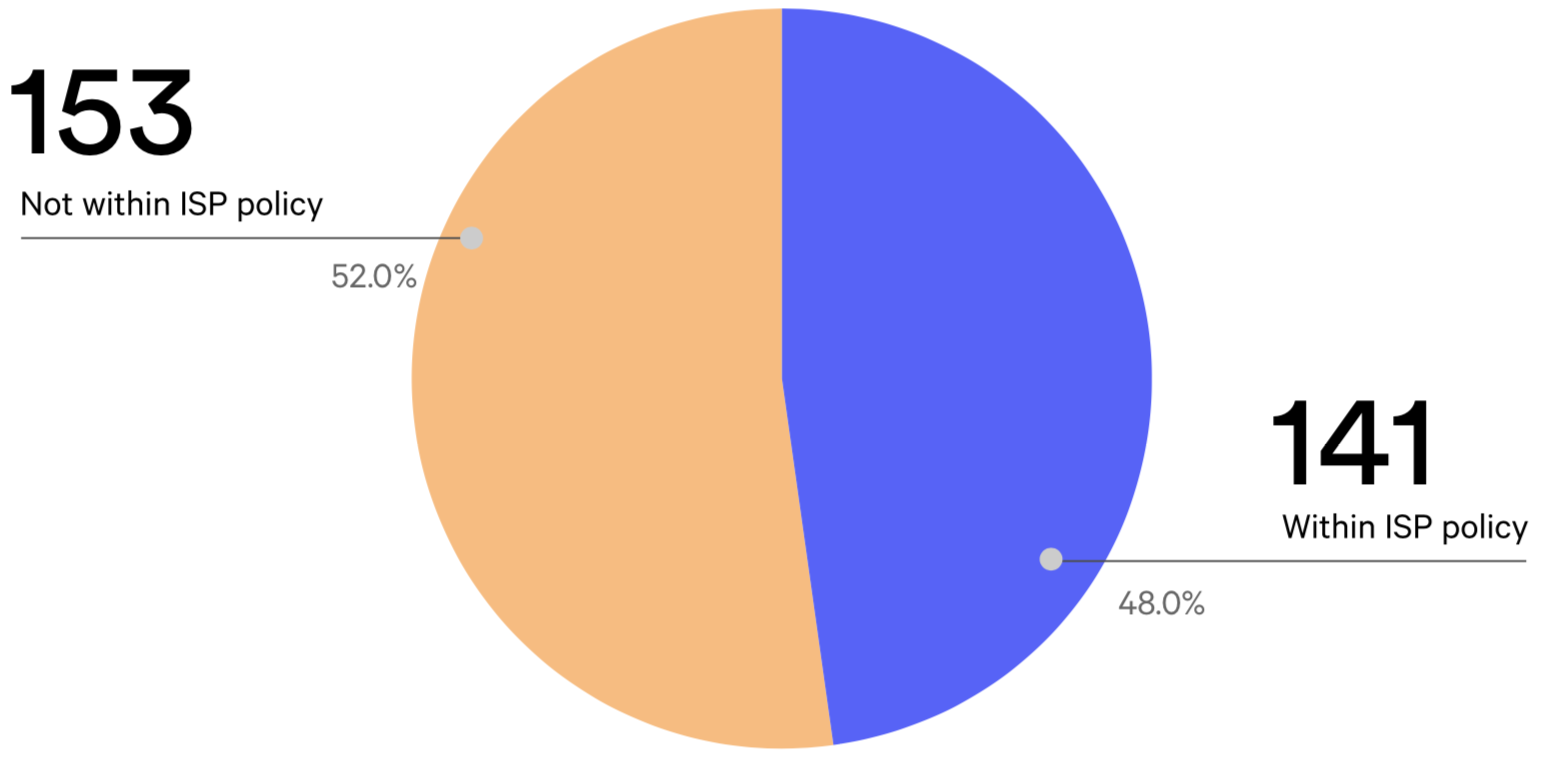

A significant proportion of requests go missing. In 2018, 294 of 1,072 reports to networks went missing, or 27%, of which we believe 153 (15%) should have resulted in a URL being unblocked.12

-

ISPs often do not reply to complaints, even when they do remove the block.

Appeals and errors

- Only mobile networks offer appeals. However, these are seldom used.

- Mobile networks do not properly apply policy changes arising from British Board of Film Classification (BBFC) rulings. The BBFC made it clear that VPN providers should not be blocked, yet we have identified around 300 VPN-related websites that are blocked and need review, and requests from VPN providers are not usually resolved favourably without a further appeal to the BBFC.13

- For fixed networks, no appeal is possible. Within our complaints, we believe at least 39 reclassification errors were made by ISPs after unblock requests were submitted.

Introduction: Why are websites being blocked in the UK?

Since 2011, ISPs in the United Kingdom have applied filters to Internet connections in an effort to block children and young persons from accessing websites which host content considered inappropriate. This push was informally backed by the Government, who wanted to show that the UK was at the forefront of protecting children from online content. In 2013, the then Prime Minister David Cameron declared: “I want to talk about the Internet, the impact it’s having on the innocence of our children, how online pornography is corroding childhood and how, in the darkest corners of the internet, there are things going on that are a direct danger to our children and that must be stamped out.”14

In the same speech, Cameron announced that the four main ISPs in the UK (TalkTalk, Virgin Media, Sky and BT) had agreed to install ‘family friendly’ content filters and to promote them to their existing and new customers.

A previous report by Open Rights Group (ORG) had shown that there were serious problems with filtering by mobile phone companies, which were incorrectly blocking many websites.15 We suspected, correctly, that there would be similar issues with network level filtering by broadband ISPs.

Overblocking has been dismissed as trivial, and ISPs cite low numbers of websites now reporting blocks. We would argue that this is not a sufficient measure of overblocking because the vast majority of website owners do not suspect that their site will be blocked. ORG’s Blocked tool, created to identify blocked sites, gives a more accurate picture of the scale of the problem. UK websites are being incorrectly blocked in their thousands, and this includes sites that provide help and advice for young people and other household members. We have also found examples of significant under-blocking, where inappropriate content is not being blocked by filters.

There is no evidence that filters are preventing children from seeing adult content or keeping them safe online. They may be contributing to a lack of resilience that can increase risk to children.

Private companies are making questionable choices about what is and is not acceptable for under 18s, with no oversight or consideration of actual harms to young people.

Following the passing of an EU regulation on net neutrality, the position seems clear that Internet filtering by ISPs is prohibited. This is a regulation that was supported by the UK Government, in the full knowledge that it would have implications for ISP-level content restrictions, and the significant investments that the Government demanded from them.

In the short term, we would urge companies to ensure that customers are, at a minimum, being given the option to opt in to filtering, as well as sufficient information for this to be an informed choice. ISPs should move customers to independent, device level products that can be focused on a child’s needs. Ofcom need to clarify the legal situation for UK network operators as the regulator responsible for the Internet access regulations.

As this report shows, filters are a flawed technical solution to a social problem. As with other areas of social policy, we would urge the Government to embrace a more long-term and holistic approach to promote lasting online safety.

This report looks at the impact of filters applied to prevent young people from seeing content that is believed to be unsuitable for under 18s. It looks at why filters were introduced, how they work and the kind of content they aim to block.

Using evidence from www.blocked.org.uk, a tool created by ORG and ORG supporters, we show evidence of over-blocking and under-blocking by filters. We look at the complaints process and error handling. In this report, we outline recommendations for greater transparency and consent with regard to filters, as well as other suggestions for keeping children safe online.

This report shows the dangers of poorly-designed policy, and of reliance on technology to police online content. The UK Government and the European Union continue to push technological solutions in this area, for instance in Article 13 of the Copyright Directive, the Terrorism Directive, and the UK’s Internet Safety Strategy. However, technology has limits, and ultimately cannot substitute for human review. Furthermore, all systems cause errors. Error detection is often absent or limited, and it is left to individuals in these systems to report problems. Inevitably, mistakes are often not reported, and even when they are, they are not always resolved.

PART ONE: The policy context

What problem are content filters trying to solve?

The Internet has opened up new worlds to children, who now have unprecedented access to information and ideas, and the means to communicate them. While there are clearly huge benefits, using the Internet carries risks for children and young people, including accessing age-inappropriate content. For a number of years, children and young people seeing pornography online has been a major concern for politicians, publications such as the Daily Mail and children’s charities like the NSPCC.

Concerns around content range from young children being upset after accidentally being exposed to adult images, to worries that the excessive consumption of pornography is affecting how young people view sex and relationships. A 2016 report into sexual harassment and violence in schools by the House of Commons’ Women and Equalities Committee said that, “Widespread access to pornography appears to be having a negative impact on children and young people’s perceptions of sex, relationships and consent. There is evidence of a correlation between children’s regular viewing of pornography and harmful behaviours.”16

The media debate initially tended to focus on pornography17, although there has been discussion about the harms caused by forums and websites that promote anorexia, self-harm, and extremism. However, as we explain in more detail below, the adult content filters currently being applied in the UK by ISPs and mobile phone providers actually cover a much wider range of subjects, including alcohol, drugs, sex, religion, and politics. This is particularly problematic because filters are applied to adults as well as children, either as ‘whole home’ solutions provided by ISPs, or as ‘default’ filters on phones which require effort to remove.

Fears about the corrosive influence of certain types of content, whether music, film or games, have been discussed for years, both in the media and at a policy level. However, Internet saturation and the rise in the use of tablets and smartphones mean children can now access content to the exclusion of their parents or other adults in a way that was not previously possible. A Plymouth University report on peer education and online safety notes: “Combine the feeling of exclusion with the Internet safety messages based on a risk-laden environment, and there surfaces an assumption that children are engaging in unsafe activities.”18

According to the Internet Matters website: “Our … Pace of Change Research (2015) shows that 48% of parents believe their children know more about the Internet than they do, and 78% of children agree.” This has undoubtedly contributed to a sense of powerlessness and concern felt by parents, and to an extent by MPs and the media. This has, in turn, shaped the debate and proposed solutions for keeping children safe online. A United Nations report into free expression noted: “The limited understanding of children’s use of the Internet frequently leads to the adoption of more restrictive approaches aimed at safeguarding children.”19 How we approach the issue of harmful content may change as digital natives become parents themselves.

Thanks in part to ORG’s awareness raising, the Government accepted overblocking is a problem and initially set up a task force to deal with it. Originally an independent body, the task force was subsumed into the UK Council for Child Internet Safety (UKCCIS), which listed as one of its achievements “considering potential problems around overblocking”. We observed little action, though, despite our attendance at most of their overblocking meetings.20 The working group was specifically tasked with ensuring that:

“ISPs develop and implement a single, centralised process for site owners to check the status of their site and report cases of suspected overblocking.”21

This resulted in a single email address being presented on Internet Matters, and made available only to website owners. The UKCCIS ‘overblocking working group’ reported at its conclusion in 2015 that:

“To date, no webmasters have reported that the ISPs are overblocking their websites via Internet Matters, and a similar low level of activity is reflected in the data from the UK mobile operators (EE, O2, Three and Vodafone), which is published quarterly by the BBFC since September 2013 in conjunction with the Mobile Broadband Stakeholder Group.”22

This stands in contrast with the evidence in this report, of hundreds of complaints filed through our tools and thousands of likely misclassifications shown through our searches. We suspect the truth is that complaints via ISPs and Internet Matters are so low because:

- Many website owners do not know about filters and do not suspect that their sites are blocked. In our experience, charities are more aware of filters but many businesses, organisations and individuals have no idea that their website can be blocked.

- Many of the sites being blocked have low levels of traffic so the error may not be easily noticed.

- Many of the people who have got in touch with ORG found out by accident that their site was blocked.

- People do not understand the implications of being blocked – especially if their site is blocked on a network other than their own.

- Apart from the Blocked tool, there is no way of checking whether sites are blocked across all ISPs and mobile phone providers.

- If website owners discover their site is blocked, they may not know that they can challenge this decision or how they should go about it.

Harms resulting from blocks were also examined by the Group, particularly as content relevant to children, such as advice sites, might be blocked. The Overblocking Working Group agreed that “just … ChildLine and other emergency support for young people, including their individual forums” would be whitelisted to ensure children could find help. However, blocking of content relevant to children was not addressed, nor was the possible scale of blocking of help sites. We have found this to be a particular problem in our research.

Being blocked by adult content filters can also cause real problems for business owners or other people who make a living through the content on their sites. As we illustrate later in this report, overzealous web filtering can lead to businesses and website owners losing out on potential customers and revenue, as these potential customers find themselves unable to access the business website and instead take their business elsewhere.

Further, blocking sites belonging to legitimate businesses and groups has the knock-on effect of potentially causing lost sales, and may lead to potential site visitors trusting the judgment of the filtering systems and wrongly assuming that the site hosts unsavoury content.

The harm done to businesses and blocked sites by these filters is exacerbated by the fact that users are not always empowered to choose whether their filters are on or off. Filtering can always be toggled by users; however, not all users are aware that filtering is enabled for them by default, and not all are asked whether they want to enable it. This leads to a natural expansion of filtering to people who did not need or ask for it, such as those without children or who live only with children over 18.

These adult content filters can, of course, be disabled, but this does not counter the potential problems that they may cause. There are a multitude of reasons why Internet users may be unwilling or unable to disable adult content filtering:

- Users may not be the bill payer (shared household, public Wi-Fi, etc);

- Users with young children may wish to keep the filters enabled;

- Users of mobile networks may be unwilling to submit the required ID documents to disable the filters.

Wrongful blocks can be circumvented, but a business affected by a block still loses traffic from people who do not have the time to work out how to bypass the block or turn off filtering, and from people who trust the filtering systems to be accurate and assume that a blocked site must be somehow problematic or malicious.

Assessing the harms of adult content

ORG campaigns to protect the right to privacy and free expression online, and to challenge mass surveillance. It is not within our remit or expertise to assess the impact or potential harms of pornography or other adult content on children and young people. Our remit is to consider the impact of policies on the free speech and privacy of web users, including children, parents, and website owners.

However, we would urge that the policy debate around the harms caused by content:

- Takes an evidence-based approach

Policies must be informed by independent research into the various strategies for keeping children safe online. Surveys of attitudes can reflect what parents and children think, or what they think society believes they ought to think, rather than being objective measures of harm. This does not mean that they are not of value, but their limits must be recognised and they should not be the sole driver of policy.

- Is framed correctly

Filters do not just block pornography but a wide range of content, using broad categories such as alcohol, drugs, sex and extremism. It is important that this is recognised in any discussion of filters’ impact on young people.

- Includes young people’s voices

While children are asked about their concerns and fears, they are rarely asked about the solutions. Dr Andy Phippen from Plymouth University told ORG,

“I work a great deal with young people and what I am struck by is the majority of ‘online safety’ education is the prohibitive approach – don’t do this, don’t look at this, etc. What they tell me they need, rather than tools to stop them doing things (which they know don’t work), are safe spaces and knowledgeable staff to allow them to discuss these issues in a sensible manner. We are never going to prevent access to pornography by determined teens, so they need to understand the impact of access and the wider cultural issues”.

- Does not solely focus on risk

Many of the resources available to children, teachers and parents focus on the risks posed to children by the Internet and the exclusion of parents and other adults in being able to protect them from this.23 Aside from the fact that it is impossible to wholly eliminate risk, technology-focused approaches to ensuring child safety online often fail to consider the potential risks they themselves may pose to children’s rights. As part of a series of reports on “Children’s Rights and Business in a Digital World”, UNICEF highlighted that:

“current public policy is increasingly driven by overemphasized, albeit real, risks faced by children online, with little consideration for potential negative impacts on children’s rights to freedom of expression and access to information. The ICT sector, meanwhile, is regularly called on to reduce these risks, yet given little direction on how to ensure that children remain able to participate fully and actively in the digital world.”24

- Does not focus solely on technology

We are supportive of the Government’s 2017 commitment to introduce compulsory sex and relationships education (SRE) in schools. We feel that it is important for children to receive SRE which addresses topics such as pornography, relationships and online abuse. Children also need to be educated about how to stay safe online and what they can do if they encounter content that they might find disturbing or frightening.

- Encourages active parenting

This may mean actively monitoring young children’s Internet use and talking to older children about the dangers they might encounter online. Parents already do this on a daily basis – whether it is discussing the news in age-appropriate terms or educating their children about alcohol, drugs and sex. Promoting device-level filters rather than ISP-level filters may also help parents to take a more active role in their child’s Internet use, and can assist parents to have more granular control over what their children are able to see online, rather than outsourcing such decisions to Internet Service Providers.

The 2010 coalition Government identified tackling ‘the commercialisation and sexualisation of childhood’ as one of its commitments.25 The subsequent 2011 Bailey Review into the commercialisation and sexualisation of childhood recommended that parents should be given an ‘active choice’ about applying filters when they bought a device or entered into a contract.26 Bailey recommended that companies offer parental controls voluntarily, or if they failed to comply within a timescale, through regulation. In 2013, the coalition Government persuaded the UK’s four largest Internet Service Providers (BT, TalkTalk, Sky, and Virgin Media) to fulfil this recommendation and make network-level filters available to their customers.

Network-level filters have been promoted as a simple way of preventing children from seeing adult content. Parents do not need any technical expertise to activate them. Former Prime Minister David Cameron said filters were intended to provide “One click to protect your whole home and to keep your children safe.”27 As this report will show, this simplistic view is misleading and potentially counterproductive.

Filters – whether applied at home or in schools – have now become central to government policies for children’s safety online.

Mobile Networks

Mobile networks introduced ‘opt-out’ or ‘default on’ filters much earlier than fixed-line ISPs on the basis that it was hard to know who a mobile account was being used by. An industry Code of Practice in 2004 first established the general approach.28 Mobile ISPs started to introduce opt-out filters from around 2011.29

One immediate limitation of filtering Internet access for children in this manner is that network-level filtering applied by a user’s mobile ISP will only work when a user is using mobile data. If the user is connected to a WiFi network, any filtering will be done through that WiFi network rather than the mobile ISP.

Mobile phone filters are switched on by default by a number of providers, including EE, Telefonica (O2), Three and Vodafone. Mobile phone customers generally have to prove they are over 18 if they want to switch filters off. Some networks require the submission of identification documents, such as a passport, in order to allow the filters to be disabled.

Mobile phone providers use a framework from the BBFC to identify content that should be filtered. This means that all mobile phone providers should in theory be using the same criteria to decide whether sites are blocked, although data from the research we have conducted through our Blocked tool suggests that there is still some variation from provider to provider.

Site owners who think or realise that their site has been blocked incorrectly can appeal to the BBFC, who publish quarterly reports on the outcomes. There is a unified appeals process for websites filtered by mobile ISPs, and transparency about decisions. While it is not perfect, and is not a legal process, it is better than the previous arrangement which lacked independent means to deal with errors.

Fixed-Line ISPs

ISPs agreed to install filters on home broadband connections following private meetings with MPs and policy makers. Initially, the proposals were for ISPs to offer their customers an ‘unavoidable choice’. ISPs would ask customers if they wanted filters, often blocking all other Internet access until a choice had been made.

Filtering on fixed-line ISPs began to roll out in 2014. Despite some concerns being raised that customers were not being given an informed choice, they did initially have an opportunity to decide whether to enable the filters. However, in December 2015, Sky announced that it would turn on filters by default for new customers.30 TalkTalk also announced in a blog that they would be activating filters by default until customers made a choice about whether to opt out (the blog is no longer available on TalkTalk’s site).31 However, when ORG met with TalkTalk in July 2017, they confirmed to us their customers are forced to make a choice when setting up. We believe there is a similar arrangement at Virgin Media.32

Network-level blocking means ISPs enable filters that apply to every device connected to a household network. They can only be switched on or off by the account holder. Most ISPs offer different levels of filtering and some allow customers to customise the categories they would like to be blocked.

Most ISPs offer different levels of network-level filtering for different age groups, but only one level can be active at any time. Some offer the ability for timed filters, which can be switched on or off automatically, depending on the time of day.33 Device-level filtering is also achievable without the use of ISP filters by using filtering software on individual devices, such as Net Nanny, McAfee Family Protection, or OpenDNS FamilyShield.

Each ISP outsourced the development of their filters to third party suppliers such as Symantec, and there is no consistency or transparency about the criteria that these suppliers are using. Depending on where suppliers are located, there may be an inherent cultural bias about what is viewed as inappropriate for under 18s. There is a large discrepancy over which websites or categories of content each ISP filters. What is blocked by one ISP is not necessarily blocked by another.34 There is no definitive list of sites that are considered harmful for children nor, it would seem, are there any consistent criteria.

Each ISP also has its own complaints process. Some ISPs have indicated that their supplier decides what should be blocked and they have no control over it. For example, when contacted to request review of a site that is inappropriately blocked, we find that BT’s automated system issues a response that says:

“BT Parental Controls is conducted by our expert 3rd party supplier and BT is not involved in this process. … BT or its third party supplier will not enter into correspondence regarding this investigation.”

Staff do not always appear to be appropriately trained to deal with overblocking queries over the phone. One website owner we spoke to found that when she called Virgin Media to report the incorrect block, the customer services operative she spoke to refused at first to believe that there was no pornographic or violent content on her site. She was told to speak to her own ISP even though they were not blocking her site, and even advised to tell all her customers to disable the filters.35

Take up of ISP filters

When ISP filters were launched in late 2013, they were first offered to new customers. An Ofcom report showed that, six months later, the take up among new customers was relatively low:36

- BT: 5%

- Sky: 8%

- TalkTalk: 36% 37

- Virgin Media: 4%

Ofcom published a second report in December 2015, one year after ISPs’ existing customers were given an ‘unavoidable choice’ about filters. In January 2015, Sky changed its process so that if existing customers did not make a choice about filters, they were switched on automatically whether or not there were children in the household. Customers had to opt out if they did not want them.

ORG contacted each of these ISPs in 2015 for the actual numbers of households using filters. TalkTalk were the only ISP to provide figures. Using a combination of figures relating to broadband customers and the take up figures from Ofcom, we created rough estimations for the number of households that have active filters in the UK.

| ISP | Percentage of customers using filters38 | Number of households using filters39 |
|---|---|---|
| BT | 6% | 550,000 (estimate) |
| Sky | 30-40% | 2 million (estimate) |
| TalkTalk | 14% | 450,000 (confirmed by TalkTalk in July 2017) |
| Virgin Media | 12.40% | 650,000 (estimate) |

We welcome corrections to these figures from ISPs. We would encourage ISPs to regularly publish up-to-date figures of how many households have adult content filters enabled. Website owners can get a better understanding of the impact of web blocking if they can see how many households will not be able to see their site if it is blocked.
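As a rough consistency check on the table above, the sketch below works backwards from each row to the broadband customer base it implies. It assumes the household figures were obtained by multiplying an ISP's customer base by its take-up rate; that relation and the function name are our assumptions for illustration. Only the percentages and household counts come from the table, and Sky is omitted because its take-up is given as a range.

```python
# Figures copied from the table above: (take-up rate, households using filters).
# Sky is left out because its take-up is reported as a range (30-40%).
TABLE = {
    "BT": (0.06, 550_000),
    "TalkTalk": (0.14, 450_000),
    "Virgin Media": (0.124, 650_000),
}


def implied_customers(take_up, households):
    """Customer base implied by households = customers * take_up (an assumed relation)."""
    return households / take_up


implied = {isp: implied_customers(rate, homes) for isp, (rate, homes) in TABLE.items()}
for isp, customers in implied.items():
    print(f"{isp}: roughly {customers / 1e6:.1f} million broadband customers implied")
```

An ISP that disputes one of the estimates could compare the implied customer base with its own published subscriber numbers.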

More recent evidence from Ofcom states that around half of parents do not use filters, even when they are aware of them.40

Do filters prevent children from seeing harmful content?

We are not aware of research demonstrating that filters are effective in preventing children and young people from seeing harmful content. Researchers from Oxford University’s Oxford Internet Institute published a 2017 paper in the Journal of Pediatrics noting they had “failed to find convincing evidence that Internet filters were effective at shielding early adolescents from aversive experiences online” and, within their sample, found “convincing evidence they were not effective”.41

It can be assumed that filters will limit very young children’s ability to see pornography – whether searched for intentionally or encountered accidentally – unless they are particularly adept at using technology. However, filters do not block all pornography, so there is still a risk that a young child could come across unsuitable content even if ISP filters are activated. This could be through mainstream social media platforms, such as Twitter42, or by actual pornographic sites that are not blocked by filters.43

Our research shows filters may block many pornographic sites and many sites that children are unlikely to be interested in anyway, such as wine merchants and breweries, but they are unable to protect children from individual pieces of content on sites like Twitter, Facebook and YouTube. In the modern encrypted era of the Internet, filters are an increasingly blunt instrument which can only restrict access to unsuitable content on the aforementioned platforms by blocking access to the entire platform.44 This renders ISP-level web filters ineffective as gatekeepers for what content children are able to view online.

YouTube in particular has been the focus of recent media attention concerning content children can view. While content on YouTube may not be pornographic by site policy, parents have raised concerns about children still being able to access “disturbing videos” on the platform, including on the YouTube Kids section of the platform which is designed to exclude content which is unsuitable for children.45 ISP-level filters are unable to take any effective steps to stem the flow of potentially unsuitable content on these platforms aside from, as already discussed, blocking entire platforms indiscriminately.

If technically adept children wish to view pornography, filters are unlikely to stop them. Ofcom’s report into the strategies parents use to keep their children safe noted “there is broad agreement that all content filtering solutions are liable to circumvention by a dedicated and technically competent user, supported by a range of advice available online.”46

Parents are also aware of this. According to Ofcom’s 2018 Children and Parents: Media Use and Attitudes report, 15% of parents of children aged 5-15 said they thought their child would be able to bypass home network-level filters. This figure rises to one in five among parents of children aged 12-15.47

Professor Andy Phippen, professor of social responsibility in information technology at the University of Plymouth, argues that this is why young people appear to tolerate filtering and monitoring technology:

“they know [filters] don’t work and they know how to get around them. Filtering can be bypassed through proxies and personal hotspots, monitoring doesn’t work with encrypted communication, and location tracking can be disabled if you switch off your device or leave it with a friend!”48

Some of the ways that older children may be able to see pornography or other banned content include:

- Friends

They may access content through WiFi at friends’ houses where filters are not activated. Friends may also send pornographic content via WhatsApp or similar messaging apps that are not affected by filters. A Parent Zone report noted: “each child – however diligent their parents have been about filtering and monitoring on home broadband – is only as safe as their least-protected friend”.49

- Virtual private networks (VPNs)

VPNs allow users to access websites securely and anonymously. Devices contact websites through the VPN, bypassing filters or geo-blocking. These can be free or paid for. The VPN market is growing rapidly. The global mobile VPN market is expected to grow to $1.5 billion by 2023.50

- Proxy sites

Proxy sites act as intermediaries between computers and websites and files they want to connect with. They are often free and easy to use. Crucially, proxy sites are often encrypted, which means that ISP-level adult content filters are unable to block sites which users access via a proxy.

- Tor

Tor is free software that allows people to use the Internet anonymously, without filters being able to see what sites are being visited, or block traffic to sites which are on the block list. Internet traffic from Tor users is routed through a series of nodes run by volunteers.

- File sharing services

While file sharing sites may be blocked, it is harder to stop file sharing services with filters.

- Data storage

Young people may use CDs or other offline media to circulate pornography or other material such as shared music or films, much as was commonly done before increased Internet bandwidth made streaming video and large images easy to download.

- Sexting

Young people may also create their own pornographic images and share them, commonly known as sexting.

Harms of overblocking

Young people and other household members

A 2014 UN report on free speech noted that:

“The result of vague and broad definitions of harmful information, for example in determining how to set Internet filters, can prevent children from gaining access to information that can support them to make informed choices, including honest, objective and age-appropriate information about issues such as sex education and drug use. This may exacerbate rather than diminish children’s vulnerability to risks” 51

Even if only a small proportion of websites are incorrectly blocked, there can still be significant consequences. For example, we tested around 9,000 Scottish charity websites and discovered that around 50 of them were blocked by one or more ISPs.52 This was a small proportion, but a number of these sites were designed to reach out specifically to young people in a crisis, including the Different Visions Celebrate project in Dundee, which works with under-25s who have questions about their sexuality, and Glasgow’s Say Women project, which offers services to young women who have survived rape, abuse and sexual assault.

Adults can also be affected by erroneous Internet filtering – for example people attempting to access domestic abuse, rape counselling or other crisis services. Websites are often the first point of contact for such services. If people are prevented from using them, it may prevent them from getting help.

Brian Cowie, Manager and Senior Recovery Support Practitioner at Aberdeen-based Alcohol Support told STV:

“These filters are ridiculous. How are we supposed to get people help and into therapy when they need it if they can’t get through to us?

“There’s no way that I’d want anyone to be unable to reach us. The most important thing nowadays is not just for people seeking help with their alcohol problems to be able to seek help – it’s also for their families and their children to be able to access support as well.” 53

These are genuine harms that cannot be dismissed by the fact that erroneous blocking may only lead to a small number of organisations being filtered.

Harms of overblocking for businesses

Websites are essential to modern businesses and overblocking can have a serious impact. This is especially true for small businesses, who our research shows appear to be more likely to be blocked than larger ones. Small businesses are also less likely to have the kind of wide customer base that would enable them to discover very rapidly from customer feedback whether particular service providers were filtering their sites. Larger businesses are more likely to be empowered to discover erroneous blocks more rapidly and work to get them corrected.

A number of specialised wine merchants have experienced their sites being blocked by the filtering systems. We do not see the same outcome for supermarkets selling alcohol and stocking the same products. This is despite the fact that both arguably pose the same potential harm to minors.

Many of the small businesses that have contacted us note that they cannot rely on their potential customers having awareness of their brand. If their site is blocked, customers will assume that there is something dubious about it. A number of business owners who have submitted reports via the Blocked tool have indicated that their site being blocked impacts the credibility of their business.

As Rebecca Struthers, whose watchmaking business was blocked by Virgin Media, puts it:

“As a small watchmaking business, we don’t have 200-300 years of reputation that a more established company has. If customers can’t get onto the site, it flags up that there is something fraudulent, which reflects badly on us. They will assume there is something wrong with our website not the filters – they are more likely to trust BT or Virgin [Media] than a small business like ours.” 54

The small businesses that have contacted ORG have understandably been very concerned about the financial damage caused by blocking. As Amy Leatherbarrow who ran a ladies’ dressmaking agency that was blocked by Sky and O2 told us:

“Who knows how many customers have encountered this and potential sales we have lost? We also offer a re-selling service for our clients which will have been affected. Our website is so important in our advertising and marketing and this issue is devastating as a business owner.”

Philip Raby who runs a Porsche consultancy told us,

“we must have lost some business and, of course, it doesn’t look great telling people the site is not suitable for under 18s!” 55

Most of the small businesses that contacted us discovered that their site was blocked by accident. Why would dressmakers, watchmakers or Porsche dealers suspect their sites were blocked? We think that the overblocking of small businesses is a significant problem. ISPs and mobile phone providers need to be more proactive in raising awareness of this.

Harms of overblocking for free speech

Plans to stop children from seeing pornography online have been extended to a much wider range of content deemed ‘adult’. As well as leading to overblocking, this also results in companies being required to make decisions about what is, and is not, suitable for children. It is unclear that they are in a position to do this. As examples given in the case study on alcohol below show, companies are making dubious decisions about content that is unlikely to be harmful to minors. This is problematic for free speech in the UK.

As the UN Special Rapporteur’s 2014 free speech report put it:

“The Internet has dramatically improved the ability of children and adults in all regions of the world to communicate quickly and cheaply. It is therefore an important vehicle for children to exercise their right to freedom of expression and can serve as a tool to help children claim their other rights, including the right to education, freedom of association and full participation in social, cultural and political life. It is also essential for the evolution of an open and democratic society, which requires the engagement of all citizens, including children. The potential risks associated with children accessing the Internet, however, also feature prominently in debates about its regulation, with protection policies tending to focus exclusively on the risks posed by the Internet and neglecting its potential to empower children.” 56

Children aged 16-18 are already considered mature for some legal purposes. At this age, they can legally have sex, work, or join the army. It is therefore clear that it is reasonable to treat members of this under 18 age group differently, rather than to subject them to a one-size-fits-all approach to filtering which imposes the same level of content blocking on all who fall into the wide “under 18” age group.

Other harms caused by filters

Filters contribute to an increasing set of restrictions on communications and access to information for children – both at home and in school. There are also other factors in this, from the rise of technologies that tag children, to government programmes like Prevent. As a result, we are seeing the unprecedented monitoring of the UK’s young population. This has implications for the free speech and privacy rights of the next generation. We do not yet know the full extent of the effects of this, which could see a rise in self-censorship or the increased use of circumvention tools.

In the debate about harmful content online, children’s voices are not being heard. As a report by the Child Rights International Network noted: “given the gulf that exists between adults’ and children’s experience of and the ways they use technology, it is all the more important that children are involved in developing any age-labelling systems.”57

Of course, impacts such as those we have illustrated here can be argued to be anecdotal and unrepresentative. Individual problems can be fixed and dismissed. For this reason, we have tried to quantify the harms in the research we present in Parts 2 and 3.

The legal basis for content filtering

There has never been a legal obligation for companies to provide filters. In the case of ISPs, the companies voluntarily agreed to create parental controls after private meetings with government officials and policy makers. Parliament did not pass any legislation.

In November 2015, the European Union agreed the final version of a regulation on net neutrality, known as the Open Internet Regulations, which state that providers of Internet services: “should treat all traffic equally, without discrimination, restriction or interference, independently of its sender or receiver, content, application or service, or terminal equipment”.58

The regulation aims to stop ISPs from acting in an uncompetitive way, and intervening against particular parties or companies, to keep the economy as innovative as possible. Filters have clear impacts here, for example by blocking small alcohol providers such as corner shops or wine merchants, but not others, such as large supermarkets. The UK’s filtering arrangements may be unlawful because they do not treat all traffic equally; the regulation stipulates that only illegal content can be lawfully blocked.

The Regulation also attempts to provide legal balance around blocking, allowing a member state to put in place laws that require ISPs to block sites, as an exemption to the general prohibition on blocking. Such legislation would need to show respect for proportionality and the rights of the blocked site and its users.59 These requirements are not spelled out in the regulation, but might for instance include the right to be notified and to be able to stop an incorrect block.

The current blocking arrangements, which are opaque, could not be justified as proportionate, clearly codified or respecting legal process for all parties.

After the regulation was agreed by the European Parliament, the then Prime Minister, David Cameron told the House of Commons that the Government would legislate to allow filtering to continue in the UK. The following clause in the Digital Economy Act 2017 was included for this purpose:

“A provider of an Internet access service to an end-user may prevent or restrict access on the service to information, content, applications or services, for child protection or other purposes, if the action is in accordance with the terms on which the end-user uses the service.” 60

In a debate on the Digital Economy Act (DEA) 2017 amendment, Baroness Jones appeared to question the efficacy of this amendment:

“To some extent we are taking all of this on trust. While it would be easy to demand more evidence, I accept that it would not help the case of those committed to family-friendly filters—I suspect that the more we probe, the more the robustness of the proposals before us could unravel. We support the intent behind these amendments and it is certainly not our intention to bring them into question in any way.” 61

As we understand it, the amendment to the DEA 2017 is not sufficient to make ISP filtering legal. In a written question from Julia Reda MEP on the topic of the legality of ISP filters, European Commissioner Andrus Ansip, who leads the project team for the Digital Single Market62, responded that:

“the provision of an Internet access service whose terms of service restrict access to specific information, content, applications or services, or categories thereof, result in limited access to the Internet and as such would be contrary to Article 3 of the Regulation. This is further explained in paragraph 17 of the BEREC (Body of European Regulators for Electronic Communications) guidelines. Whether the end-user has the ability to disable that restriction would not affect the above assessment.” 63

The BEREC Guidelines state:

“BEREC understands a sub-Internet service to be a service which restricts access to services or applications (e.g. banning the use of VoIP or video streaming) or enables access to only a pre-defined part of the Internet (e.g. access only to particular websites). NRAs should take into account the fact that an ISP could easily circumvent the Regulation by providing such sub-Internet offers. These services should therefore be considered to be in the scope of the Regulation and the fact that they provide a limited access to the Internet should constitute an infringement of Articles 3(1), 3(2) and 3(3) of the Regulation.” 64

The Regulation will apply until the UK leaves the European Union, but may continue to have legal effect beyond this departure depending on the terms of deals or agreements which are negotiated with regard to the UK’s future relationship with the Union.

We would like Ofcom to clarify the legal status and basis for adult content filtering, and provide guidance to companies who might be in breach of the law.

Filtering by ISPs is problematic for many reasons including:

- Network level filters are ‘one size fits all’ and will never be suitable for everyone in a household. Adults may dislike the intrusion of a filter, while small children may need a lot of content to be restricted. As we discussed above, older teens in the 16-18 age bracket have different needs with regard to being protected from particular content than younger children.

- Network level filters may give a false sense of security to families who mistakenly believe ISP claims that they provide peace of mind.

- Several ISPs have trouble operating network filters. We have observed filters disabling themselves for an ongoing period of months at the test line we use at TalkTalk. At Plusnet we were unable to get filters to work at all on the two lines we tried. The technical difficulty of operating content filters at network level should not be underestimated.

- Filters depend on the misuse of the Domain Name System (DNS) by ‘forging’ DNS results, sometimes including interfering with DNS results from third-party services. The interaction between filters and DNS systems may cause reluctance at ISPs to adopt DNS security technologies, which would improve Internet safety including protection against man in the middle attacks.65

- Filtering offers a means of interfering with competitors’ markets, while claiming that this is done by user choice.

- Filtering prevents access to legitimate content in an arbitrary manner.

- In general, mass adoption of filters will be contributing to a trend towards use of VPNs, proxies and other technologies to enhance users’ privacy and access to content. Over time, this reduces the effectiveness of targeted blocking measures imposed by law, such as court orders to block copyright infringing websites, or sites that do not provide age verification for adult content.

These issues will have been in the minds of European Union legislators when drafting the Open Internet Regulations. ISPs should ideally point customers to third party tools, rather than operating network-level filters which are in conflict with their basic business of providing Internet access.

Error correction

Correcting errors in filtering systems is not necessarily easy, and was not prioritised by the Government alongside introduction of filters. Some MPs such as Claire Perry initially denied that there would be a problem with errors at all. 66

O2 are the only provider to have a URL checker.67 This tool ended up being disabled for over a year, beginning in late 2013, following its use by journalists.68 Each ISP provides an email address for reports of overblocking, but ISPs do not accept bulk or automated enquiries.

Internet Matters is a not-for-profit organisation, backed by BT, Sky, TalkTalk and Virgin Media, which promotes online safety for children. Website owners can email report@internetmatters.org to find out if their site is blocked by the four ISPs. It should also be noted that this is only available to website owners, not the wider public. Owners can ask whether a particular site might be blocked.

ORG feels these solutions are inadequate, so we run our own system, Blocked. It allows end users to test whether sites are blocked by filters on all the major broadband and mobile ISPs. ORG would like to work with providers, Internet Matters and the BBFC to help reduce the censorship caused by filters. We hope that Internet Matters will promote the Blocked tool to help people find out instantly whether a site is blocked and if they need to contact an ISP.

PART TWO: Understanding our Blocked.org.uk data

Background to Blocked.org.uk

ORG has collaborated with volunteers to develop a tool that tests whether sites are blocked by UK ISPs. The tool is located at Blocked.org.uk, and was first launched in July 2014. The system allows anyone to check whether a website is being blocked by the filters of major home and mobile ISPs in the UK. Through this we have gained valuable insight about the extent of incorrect content decisions based on filtering. The data we have collected is examined in Part 3 of this report, and laid out in summary tables in Appendix A.

Site owners and users can visit the Blocked tool and enter a domain or URL to check. The system then passes this to a series of probes, some of which are connected to fixed broadband lines, and others to mobile data connections. We have an unfiltered and a filtered line for each ISP. The probes request the URL via the ISP to which they are connected and report the results. The results of the tests appear to the user of the Blocked tool.
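The comparison between a filtered and an unfiltered line can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the Blocked tool's actual implementation: the `ProbeResult` type, the status labels and the `classify` function are hypothetical names, and a real system is likely to rely on more signals than are shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeResult:
    """Outcome of one probe request (hypothetical structure)."""
    isp: str
    filtered_line: bool
    http_status: Optional[int]  # None if the request failed entirely
    redirected_to_block_page: bool = False


def classify(unfiltered: ProbeResult, filtered: ProbeResult) -> str:
    """Compare the two lines for one ISP and label the URL.

    Assumption: if the site cannot be reached even on the unfiltered
    line, we cannot attribute any failure to the filter.
    """
    if unfiltered.http_status is None:
        return "error"
    # Assumption: a block page, or a failure seen only on the filtered
    # line, is treated as evidence of a block.
    if filtered.redirected_to_block_page or filtered.http_status is None:
        return "blocked"
    return "ok"
```

Testing both lines for the same ISP helps separate a site that is simply offline from one that is unreachable only when the filter is switched on.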

The tool also allows users to search blocked sites by category and keyword, and makes it easier to report these errors to ISPs and mobile providers. In 2018, we began to route ISP replies to users’ reports through the tool, allowing us to track the responses that ISPs sent back to Blocked users. This makes it possible to view an ISP’s stated reasoning when they refuse to unblock a particular site, and also to view how long it takes for a user to receive a reply, if at all.

When we first launched Blocked, ORG discovered that around 10% of the Alexa top 100,000 websites were blocked by the default settings of at least one filtering system. This rose to 20%, or one in five, websites when strict settings were applied. We found lots of websites are erroneously blocked, and that different sites appear to be blocked by different ISPs, demonstrating a lack of common consensus regarding what material should be blocked.

We have run tests on over 35 million unique domains across 15 ISPs and mobile providers and found over 760,000 currently blocked domains. We have added real-time updates on the Blocked tool’s results page, and expanded the tool to also detect court-ordered blocks for sites which host copyright infringing material.69

Blocked has helped website owners find out their websites are blocked. Many did not suspect that this was happening because the websites they run pose no harm to children at all. As Amy Leatherbarrow, who ran a women’s clothing company said:

“Without www.blocked.org and your help, I would not have known how to go about getting this problem resolved”.70

Keyword search has been particularly helpful for us to discover wrongfully blocked sites in certain categories, such as LGBT, counselling and business websites. We are able to use keywords to create automatically-populated lists of blocked domains which are likely to be mistakenly blocked, helping volunteers to focus their efforts to report these sites specifically.71
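As a rough illustration of how a keyword list can produce a shortlist of blocked domains for volunteers to review, consider the sketch below. The record fields (`domain`, `title`, `description`) and the function name are assumptions made for this example, and the domains are placeholders; it does not describe the actual Blocked database schema or search engine.

```python
def keyword_matches(records, keywords):
    """Return domains whose stored title or description mentions a keyword.

    `records` is a list of dicts with hypothetical keys 'domain',
    'title' and 'description'. Matching is a simple case-insensitive
    substring test.
    """
    lowered = [k.lower() for k in keywords]
    hits = []
    for rec in records:
        text = f"{rec.get('title', '')} {rec.get('description', '')}".lower()
        if any(k in text for k in lowered):
            hits.append(rec["domain"])
    return hits


# Placeholder records for illustration only.
blocked = [
    {"domain": "example.org", "title": "Bereavement counselling service",
     "description": "Support for children and adults"},
    {"domain": "example.net", "title": "Online casino",
     "description": "Play slots and poker"},
]
shortlist = keyword_matches(blocked, ["counselling", "charity"])
```

Because substring matching can itself produce false matches, a shortlist like this is only a starting point for human review before any report is sent to an ISP.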

The reports filed and the responses given, as well as the search facility, have given us the ability to present more detailed information to show how filters restrict content and make mistakes.

Aims of this research

This research seeks to understand the nature of the errors with Internet filters, and the potential damage they may cause. We believe filtering is demonstrably error prone. It should be clear that it is important to limit filtering to where necessary, for instance to help a particular child manage their Internet access. If it is the case that filters necessarily cause some damage by unavoidably overblocking, then this damage from filters can at least be limited if the use of filters is encouraged only in more targeted contexts, for instance on devices belonging to individual children, and by ensuring that adults agree to the use of filters before they are applied. It is also important to know what kinds of mistakes are made, and how these are handled.

We chose to take a closer look at the problems arising from filters on mobile networks and fixed-line ISPs. The former are ‘default on’, while ISP filters are targeted at the whole home network. Both are in our view encouraging far more filtering than is ideal, as they are targeting adults who are able to manage their content choices.

The research has attempted to understand, from our data:

- What kind of sites are reported as blocked?

- Who reports sites?

- What damage is cited by users making reports?

- How do mobile and fixed ISPs respond to reports?

Our dataset reflects reports we have handled. We are unable to examine what happens when people choose not to report mistakes, or report them directly to ISPs. For a report to reach us, the following steps typically take place:

- A block is noticed by a site owner or user or is reported to them;

- They would need to investigate how to remove the block; and

- They would need to find our website for instance via search and choose to use it rather than an ISP’s own systems.

It is reasonable to assume that Blocked.org.uk would be used mostly by non-customers of ISPs who decide not to report directly to a network, and not to use the reporting facility offered to site owners by Internet Matters. The reports we receive may well be a small fraction of all such reports.

Research methodology

We have attempted to understand what sites are blocked by examining our indexing data through searches and classifying reports made through the Blocked tool. Additionally, some interviews with affected website owners have been conducted.

User reports

The unblock requests which users submit via the Blocked tool are a rich source of information about filtering blocks and their direct impacts. For reports, we have:

- Classified each report according to the reporter’s affiliation to the site in question (owner, user, web developer, etc).

- Checked whether reports appear to be violations of the ISP’s blocking policy.

- Categorised the damage cited by users who have reported blocked sites.

- Categorised the site against our own typology.

It should be noted that users fall broadly into four types:

- People with a specific complaint, wishing to report a wrongful block they are already aware of.

- People worried that a site may be blocked, and checking if it is.

- People loosely affiliated with ORG, who are aware of issues around content filtering, and wish to make reports to ISPs about particularly problematic blocks. The main Blocked.org.uk site presents a selection of blocked sites for users in this category to check and report if necessary.

- People affiliated with a particular campaign or industry that are aware of a specific impact being caused by filters. This has included some local branches of the Campaign for Real Ale, companies selling fireworks, and shooting ranges.

Our dataset reflects the mistakes and harm suspected and uncovered by people motivated to get them rectified. We would not characterise it as a completely ‘objective’ measure of harm, nor necessarily an indication of where filters are causing the most harm, although it may provide us with a strong indication. It can, however, show that harms exist and highlight examples of these. Reports from website owners also give us an indication of who is finding out about filtering and is most concerned to rectify wrongful blocks.

Search

During the lifetime of the project, Blocked.org.uk has tested over 35 million unique domains for potential blocks. Where blocks are detected, information including title and metadata description is stored in the Blocked database. This data is made searchable on the Blocked.org.uk site, to find possible erroneous blocks. We compile lists of search results which are particularly revealing, and some of these results are prioritised for users to report. For instance, we prioritised local city results, charities, advice lines and counselling websites for users to review. However, our search is limited by the fact that we cannot regularly re-index all sites, so will not catch all blocks even for the URLs we have tested at least once.

Interviews

ORG and Top10VPN have spoken where possible to site owners about their experiences. These have been identified through their reports via Blocked.org.uk, so we are able to show when and where their site was blocked.

ISP response statistics

We evaluate the performance of ISPs by checking how rapidly and effectively they respond to user requests submitted via the Blocked tool.
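Measures of this kind can be computed along the lines of the sketch below. It is illustrative only: the input format (pairs of sent and reply timestamps, with `None` where no reply was received) and the function name `reply_stats` are assumptions for this example, and the dates are invented rather than taken from our data.

```python
from datetime import datetime
from statistics import median


def reply_stats(requests):
    """Summarise replies for one ISP.

    `requests` is a list of (sent, replied) datetime pairs, where
    `replied` is None if no reply was ever received.
    """
    days = [
        (replied - sent).total_seconds() / 86400
        for sent, replied in requests
        if replied is not None
    ]
    return {
        "sent": len(requests),
        "replied": len(days),
        "reply_rate": len(days) / len(requests) if requests else 0.0,
        "median_days_to_reply": median(days) if days else None,
    }


# Invented example data: three requests, one never answered.
example = [
    (datetime(2019, 1, 1), datetime(2019, 1, 3)),
    (datetime(2019, 1, 1), datetime(2019, 1, 5)),
    (datetime(2019, 1, 1), None),
]
stats = reply_stats(example)
```

Reporting the reply rate alongside the median delay matters because an ISP that answers quickly but rarely would otherwise look better than it is.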

Reports submitted via Blocked.org.uk

If filters work as intended, only sites clearly unsuitable for children would be blocked. We have found this is not the case. Many of the blocked sites under these filters do not contain adult content and in fact belong to charities, churches, counselling services, and mental health support organisations.

A small selection of sites which we feel have been inappropriately blocked can be found below. The rest of this report will focus on some of the specific issues we have found during our research.

Examples of Incorrectly Blocked Sites

Beam – Helping the Homeless

Beam.org is a site belonging to the Beam organisation.72 Beam is a service that raises funds to help support homeless people into training and work. The service has been featured as a success story in many national newspapers, and also receives financial support from the Mayor of London.

CARIS Islington – Bereavement Counselling and Cold Weather Night Shelter

CARIS is an Islington-based charity which runs two projects – a Bereavement Counselling Service for children and adults, and a cold weather night shelter for the homeless. CARIS is a site which we found to be inexplicably blocked by some Internet Service Providers under the filtering system.73

Welcome Church

The Welcome Church is a church based in Woking, UK.74 It describes itself as a “diverse community with activities and groups for all ages” and notes that it has “a vibrant youth and children’s work, so your whole family can feel welcome”.

Filters blocking the sites listed above are problematic, and not just for Internet users unable to access Internet content. Groups like these, as well as businesses who rely on their websites for income, might find a large segment of the population blocked from accessing their sites. Some site owners and business owners may be unaware this is happening.

Using the tool, many businesses were able to discover or confirm that their site is blocked for certain users. The tool allows site owners or potential site users to submit an “Unblock Request”, which asks an ISP to review a blocked site in accordance with their policy and ensure that their categorisation of it is correct. We find that most ISPs will take action to unblock a wrongfully-blocked site when they are alerted to it. However, it is unacceptable that, without our tool, many site owners may have been losing out on potential site visitors and customers while being completely unaware of the fact.

Manually reviewing every site on the Internet would be time consuming and expensive, so adult content filtering makes heavy use of automated systems. These automated systems may look for particular keywords or content found on web pages and use a set of rules to decide whether a site should be blocked or allowed. Using an automated approach like this can lead to false positives and unfair or unreasonable blocks. This is because the automated systems do not have as much information as human reviewers with regard to the context or importance of content on webpages.

When considering what to block, automated systems need to decide whether they prefer to block too little or too much. By making the criteria for blocking tighter and forcing stricter matches, they can reduce overblocking. However, stricter criteria increase the likelihood that they will ‘underblock’ and leave sites available which are unsuitable for children. As the products are aimed at preventing children’s access to such sites, they are likely to prefer to match against loose criteria, meaning more ‘overblocking’ mistakes are made. Looser matching decreases the likelihood of children encountering material that has been chosen for blocking, but has detrimental effects elsewhere.
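The trade-off between overblocking and underblocking can be sketched with a toy scoring model. Everything here is an assumption made for illustration: the scores, the labels, and the idea that a filter reduces its decision to a single threshold are all invented, not drawn from any ISP's system.

```python
# Hypothetical (score, is_actually_adult) pairs; a higher score means the
# imagined classifier is more confident that the site is adult content.
SITES = [
    (0.95, True), (0.80, True), (0.60, True),
    (0.70, False), (0.40, False), (0.20, False), (0.10, False),
]


def count_errors(threshold):
    """Return (overblocked, underblocked) when every site scoring >= threshold is blocked."""
    overblocked = sum(1 for score, adult in SITES if score >= threshold and not adult)
    underblocked = sum(1 for score, adult in SITES if score < threshold and adult)
    return overblocked, underblocked


for threshold in (0.3, 0.65, 0.9):
    over, under = count_errors(threshold)
    print(f"threshold={threshold}: overblocked={over}, underblocked={under}")
```

With these invented figures, the loosest threshold (0.3) wrongly blocks two harmless sites and misses none, while the strictest (0.9) wrongly blocks none and misses two. A product aimed at protecting children has an incentive to sit at the loose end.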

Even if a site is discovered to be wrongfully blocked and is reported to ISPs using our Blocked tool, some bias may still remain. At this point, a human reviewer is expected to assess the site in accordance with the ISP’s blocking policies and determine whether to lift the block.

We have found that blocks are often lifted where they are clearly in error, but some blocks remain even after a review. The opinions of individual reviewers can differ, and may influence the re-categorisation process. For example, we examined the case of a site belonging to Feeld – a dating app for polyamorous couples.75 The main webpage for this app had been filtered and, after receiving a request via our Blocked tool to review the site, one ISP continued to insist that it should be categorised as “pornography” because it targeted “alternative sexual preferences”. Neither the site nor the app contained any pornographic content. In any case, hosting such content would have violated the terms of service of both the Apple App Store and the Google Play Store.

We experienced issues with some ISPs replying unreliably to reports of sites which are inappropriately blocked. Blocked.org.uk allows users to request ISPs unblock particular sites if a user feels they have been blocked in error. Some ISPs respond to this process by unblocking sites within a reasonable timeframe. But we have experienced issues with multiple providers being slow to reply, or not replying at all. Some providers send automated responses back to our tool, indicating that they will be in touch about a particular unblock request within a few days, and then we never hear from them again.

We have attempted to quantify these issues in our research, presented in Part 3.

Error rates and numbers of likely mistakes

It is hard to calculate the number of erroneous blocks, and the number of vendors making mistakes compounds the problem for website owners. Their sites may be blocked in error by multiple opaque blocking systems, which they often cannot check.

In meetings with us, ISPs have asserted a 0.01% error rate in classifying whether sites should be blocked. Using this assumption, we can make an estimate of the total number of mistakes made, for instance on the four major fixed-line networks:76

| ISP | Sites tested | Number of blocks | Percentage of sites tested which are blocked | Estimate of erroneous site classifications using ISP figure |
|---|---|---|---|---|
| BT | 23,315,636 | 284,242 | 1.22% | 2332 |
| Sky | 23,213,914 | 233,248 | 1% | 2321 |
| TalkTalk | 22,573,610 | 311,238 | 1.38% | 2257 |
| Virgin Media | 23,252,676 | 206,116 | 0.89% | 2325 |

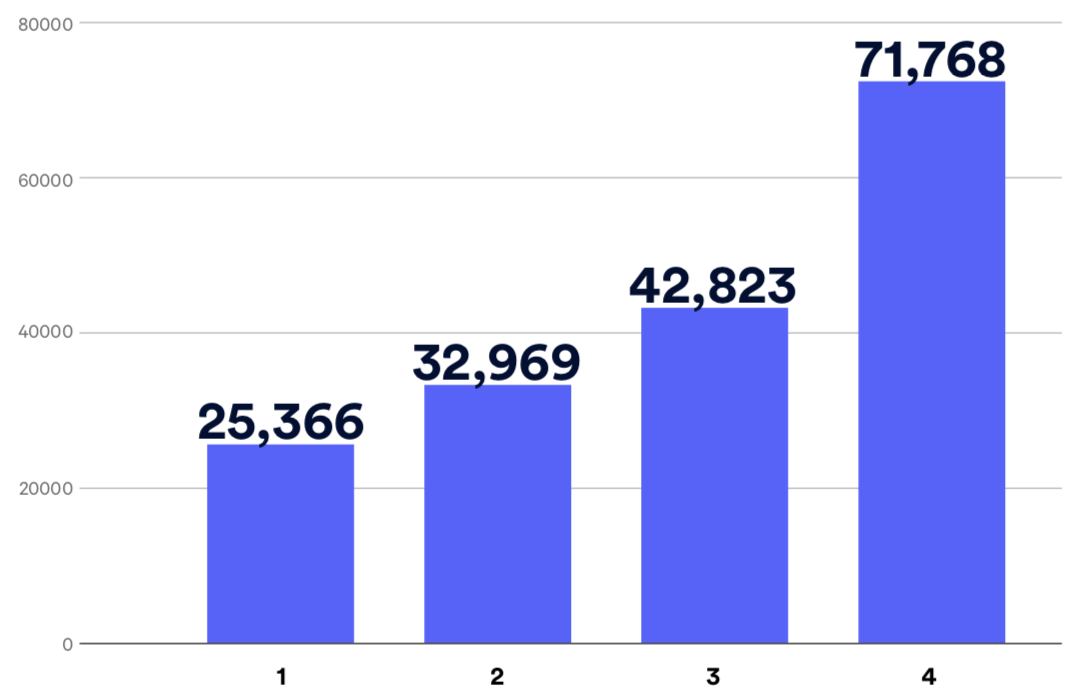
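The estimates in the table can be reproduced with a short calculation. The sketch below assumes, as the published figures imply, that the asserted 0.01% error rate is applied to the number of sites tested rather than to the number of blocks. The function and variable names are our own.

```python
ASSERTED_ERROR_RATE = 0.0001  # 0.01%, the classification error rate asserted by ISPs

# (sites tested, number of blocks) per ISP, copied from the table.
NETWORKS = {
    "BT": (23_315_636, 284_242),
    "Sky": (23_213_914, 233_248),
    "TalkTalk": (22_573_610, 311_238),
    "Virgin Media": (23_252_676, 206_116),
}


def summarise(tested, blocked, error_rate=ASSERTED_ERROR_RATE):
    """Return (percentage of tested sites blocked, estimated misclassified sites)."""
    pct_blocked = round(100 * blocked / tested, 2)
    estimated_errors = round(tested * error_rate)
    return pct_blocked, estimated_errors


for isp, (tested, blocked) in NETWORKS.items():
    pct, errors = summarise(tested, blocked)
    print(f"{isp}: {pct}% blocked, about {errors} misclassified sites")
```

Even on the ISPs' own figure, each network would be expected to misclassify more than two thousand sites from this sample.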

From our dataset we have also made a comparison of consistency based on sites which have been tested on all four mobile networks:

| Number of mobile networks blocking a URL | Domains or URLs |
|---|---|
| 1 | 25,492 |
| 2 | 33,042 |
| 3 | 43,055 |
| 4 | 72,335 |

More than half of the blocks implemented by mobile filters are not present on all networks. This is despite the fact that mobile networks have agreed on the BBFC’s unified Classification Framework, which defines what types of content should be blocked. By this measure, over half of the blocks on mobile networks may be regarded as reflecting some kind of error, whether underblocking or overblocking.
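The “more than half” figure follows directly from the mobile network table. A short check, using only the published counts (the variable names are our own):

```python
# Number of domains or URLs blocked by exactly 1, 2, 3 or 4 mobile networks.
BLOCKS_BY_NETWORK_COUNT = {1: 25_492, 2: 33_042, 3: 43_055, 4: 72_335}

total = sum(BLOCKS_BY_NETWORK_COUNT.values())
# Blocks present on some networks but not on all four.
inconsistent = total - BLOCKS_BY_NETWORK_COUNT[4]
share = inconsistent / total

print(f"{inconsistent:,} of {total:,} blocked URLs ({share:.1%}) are not blocked on all four networks")
```

This gives 101,589 of 173,924 blocked domains or URLs, or roughly 58%.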

PART THREE: Research Findings

Problematic categories of blocks

Automated filters are blunt tools. The automation is required because it would be a huge task to manually classify every website on the Internet. But automated filters are based on sets of rules and often wrongly classify content as they lack a human understanding of context or nuance. In the course of our research with the Blocked tool, we have encountered a number of recurring themes among sites which have been wrongfully blocked, which we will explore in this section.

Problematic blocks largely fall into one or more of three main categories:

- Content which has been misclassified;

- Blocks which break the Internet at a technical level (for instance, blocking content distribution sites, application programming interfaces (APIs), or servers used by mobile apps); or

- Blocks which are based on categories which are overbroad and therefore end up applying to material which is not likely to be harmful to children.

For the purposes of analysing some of the most frequently recurring themes we have seen among blocked sites, this section will isolate some examples of content which fall into the categories identified above. It is important to note that there are limitations to our analysis, as we are unable to reverse-engineer the inner workings of web filtering systems. Our methodology is focused mainly on identifying common patterns or shared characteristics among sites which we have identified as being filtered. At the time of writing, the range of domains which we have proactively analysed using the Blocked tool is slightly UK-centric, and we expect to see further patterns emerge as more domains are processed and added to the database.77

The table below identifies some of the commonly recurring categories of domain which have been submitted to ISPs on behalf of users of the Blocked tool, and the number of domains which fall into each category. We compiled this data by manually reviewing each domain submitted for unblocking via the Blocked tool and assigning a category which describes its main content. We analysed a total of 857 unique domains submitted by Blocked.org.uk users since July 2017. An expanded version of this table with the full range of categories we have manually assigned to reports is available in Appendix A of this document.

| Category for Submitted Reports | Number of reported domains |
|---|---|
| Advice sites (drugs, alcohol, abuse) | 52 |
| Alcohol-related (non-sales) sites | 36 |
| Building and building supplies | 32 |
| CBD oils, CBD-related, and hemp products | 21 |
| Charities and non-profit organisations | 68 |
| Counselling, support, and mental health | 122 |
| LGBTQ+ sites | 40 |
| Religious sites | 21 |
| Weddings and wedding photographers | 44 |

To further analyse some of the recurring themes which we have encountered among blocked domains, we also expanded the scope of our analysis to include blocked domains which were in the Blocked.org.uk database but which had not necessarily yet been reported by users of the site. To quantify this data, we used keyword searches of Blocked.org.uk’s database of blocked domains to identify sites which matched keywords relating to the various categories of frequent block we had seen. Through this process, we produced lists of sites in those categories which we feel are likely to be wrongfully blocked. While the number of user-reported URLs in particular categories is often relatively small, the lists of sites produced by keyword searches of our database offer a view of the scale and unpredictability of the overblocking which may be happening for specific types of site. A version of this table with footnote sources for the lists used to compile the data is available in Appendix A of this report.

Table A – Keyword list categories

| Sites matching keywords related to | Number of blocked domains identified through search | Number of domains still blocked | Current ISP blocks |
|---|---|---|---|
| Addiction, substance abuse support sites | 185 | 91 | 287 |
| Charities and non-profit organisations | 98 | 17 | 24 |
| Counselling, support, and mental health | 112 | 77 | 191 |
| Domestic violence and sexual abuse support | 59 | 14 | 42 |
| LGBTQ+ sites | 114 | 39 | 121 |
| School websites | 161 | 23 | 52 |

Table A.2 Verified search results, UK sites only

| Sites matching keywords related to | Number of blocked domains identified through search | Number of domains still blocked | Current ISP blocks |
|---|---|---|---|
| Addiction, substance abuse support sites | 35 | 14 | 48 |
| Charities and non-profit organisations | 91 | 17 | 24 |
| Counselling, support, and mental health | 104 | 70 | 177 |
| Domestic violence and sexual abuse support | 7 | 3 | 11 |
| LGBTQ+ sites | 27 | 7 | 25 |
| School websites | 143 | 13 | 28 |

Table A.3 Unverified search results

| Sites matching keywords related to | Number of blocked domains identified through search | Number of domains still blocked | Current ISP blocks |
|---|---|---|---|
| Building and building supplies | 67 | 26 | 64 |
| CBD oils, CBD-related, and hemp products | 307 | 220 | 1081 |
| Drainage and drain unblocking services | 107 | 3 | 3 |
| Photography sites | 1858 | 732 | 2109 |
| Religious (Christian) sites | 137 | 54 | 147 |
| Weddings and wedding photographers | 4506 | 1718 | 3739 |
| VPN sites | 404 | 345 | 1719 |

Table A.4 Unverified search results, .uk domains only

| Sites matching keywords related to | Number of blocked domains identified through search | Number of domains still blocked | Current ISP blocks |
|---|---|---|---|
| Building and building supplies | 59 | 23 | 48 |
| CBD oils, CBD-related, and hemp products | 112 | 91 | 510 |
| Drainage and drain unblocking services | 99 | 2 | 2 |
| Photography sites | 1372 | 514 | 1348 |
| Religious (Christian) sites | 37 | 9 | 20 |
| Weddings and wedding photographers | 4012 | 1480 | 3154 |
| VPN sites | 23 | 15 | 55 |

These tables are intended to illustrate how easy it is to find likely or actual errors in blocking systems. The data used in the tables above are intended to display particular patterns we have observed among blocked sites. They cannot be said to be inclusive of “all websites” as the Blocked tool is limited to testing only domains which have been submitted by users, or indexed for search. Figures based on user-submitted reports cannot perfectly reflect the entire landscape of wrongful or overzealous blocking by content filters, as users submitting reports are likely to proactively search for and report sites in categories which they already suspect are affected. Nonetheless, we believe the data is a reliable illustration of some of the issues that are arising commonly with the misclassification of particular categories of website.

The work that would be required to remove errors from ISPs’ filtering systems would be very considerable, even for those areas we have been able to identify. The search terms we have used are not exhaustive and will not catch all relevant content, as the Blocked project’s site indexing is limited. Our keyword searches are further limited by the fact that we have to run exclusion terms to filter out the most likely adult content in order to identify just those domains that are most likely to be mistakenly blocked.
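The combination of search keywords and exclusion terms described above can be sketched as follows. This is an illustration only: the domain names and terms are hypothetical, and the sketch matches against domain names alone, which may not reflect how the Blocked project's index is actually searched.

```python
def find_candidates(domains, include_terms, exclude_terms):
    """Return domains that contain an include term and none of the exclusion terms."""
    results = []
    for domain in domains:
        name = domain.lower()
        matches_keyword = any(term in name for term in include_terms)
        looks_adult = any(term in name for term in exclude_terms)
        if matches_keyword and not looks_adult:
            results.append(domain)
    return results


# Hypothetical blocked domains, using the reserved .example top-level domain.
blocked_domains = [
    "oakfield-primary-school.example",
    "adult-school-cams.example",
    "citydrainage.example",
    "villageschool.example",
]

print(find_candidates(blocked_domains, ["school"], ["adult", "cams"]))
# ['oakfield-primary-school.example', 'villageschool.example']
```

Exclusion terms make the resulting list cleaner, but they also discard any legitimate site whose name happens to contain one of them, which is one reason the counts in the tables should be read as conservative.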

Content misclassification

As filters misclassify content so regularly, we have logged a large number of examples of misclassification over the lifetime of the Blocked project. Due to the automated nature of these filters, there are a number of repeated misclassifications we have seen which are common and recur on a regular basis. This section will explore some of the more common misclassifications we encountered.

In many cases, misclassification does not have an obvious reason. It may be that filters classify some sites according to their hosting provider, for instance, assuming that all sites sharing a particular IP address are likely to be pornography, even when the sites are in fact radically different.

Domestic violence and sexual abuse support networks

One of the most obvious and egregious misclassification errors is the blocking of sites which offer information and support to survivors of rape and sexual assault.78 Such sites, understandably, contain frequent uses of words and terminology which could be interpreted as sexual or pornographic in nature by a blunt filter which does not have an appropriate understanding of the context in which language is used.

The filtering of such sites is extremely problematic, as it may lead to vulnerable or at-risk people being restricted from accessing vital safety information or emotional support resources. The damage caused by this type of filtering is potentially very large, and ISPs should therefore take proactive steps to ensure that their filtering systems are free of examples of sites which fit into these categories.

School websites

Despite being demonstrably appropriate for children, school websites do not escape being caught up by overzealous adult content filters. Our research produced a non-exhaustive list of schools and school-related websites which were blocked, with at least 13 URLs in this category blocked by at least one ISP at the time of writing.79